Artificial Intelligence (AI) has become an integral part of modern society, with its applications ranging from simple automation to complex decision-making processes. While AI has brought immense benefits to society, it has also raised several legal and ethical concerns. The use of AI has created new challenges for policymakers, corporate entities, and legal professionals.

The legal aspects of AI are complex and multifaceted. The use of AI in decision-making processes has raised concerns about bias, transparency, and accountability. The lack of transparency in AI algorithms has made it difficult to hold entities accountable for their actions. Additionally, the use of AI has raised concerns about data privacy, intellectual property rights, and liability.

As AI continues to evolve and become more sophisticated, it is essential to address the legal and ethical concerns that arise from its use. The legal framework surrounding AI is still in its infancy, and policymakers and legal professionals must work together to develop regulations that balance innovation and societal values. In this article, we will explore the legal aspects of AI and the challenges that arise from its use. We will also examine the current legal framework and the steps that policymakers and legal professionals can take to address the legal and ethical concerns surrounding AI.

Overview of AI

Artificial Intelligence (AI) is a branch of computer science that involves developing machines that can perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, decision-making, and perception. AI is an umbrella term that encompasses a wide range of technologies and applications, including machine learning, natural language processing, computer vision, robotics, and expert systems.

What is AI?

In practice, AI systems are designed to learn from experience, adapt to new situations, and make decisions based on data. They are often used to automate tasks that are difficult, time-consuming, or impossible for humans to perform, such as analyzing large amounts of data, detecting fraud, or diagnosing diseases.

Types of AI

AI systems are often grouped into four types according to their capabilities. The first two exist today, while the last two remain theoretical:

- Reactive Machines: These are the simplest form of AI, and they do not have the ability to learn from experience. They can only react to specific situations based on pre-programmed rules.

- Limited Memory: These AI systems can learn from historical data and use recent observations to inform their decisions, but what they retain is limited. Most current machine learning systems fall into this category.

- Theory of Mind: These hypothetical AI systems would be able to understand the mental states of other entities, such as humans or animals, and use this understanding to predict behavior and make decisions. No such system exists today.

- Self-Aware: These hypothetical AI systems would have a sense of self-awareness and understand their own existence. They remain a theoretical concept rather than an existing technology.

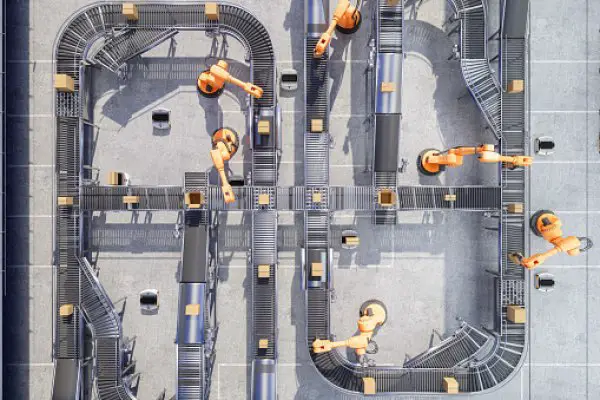

AI is becoming increasingly important in many industries, including healthcare, finance, and manufacturing. However, as AI becomes more advanced, it also raises a number of legal, ethical, and social issues that need to be addressed.

Legal Framework for AI

AI is a rapidly evolving technology with the potential to transform many industries. As it becomes more prevalent, it raises complex legal issues that require attention from policymakers, regulators, and legal professionals. In this section, we will look at the current legal landscape and the regulatory framework for AI.

Current Legal Landscape

The legal landscape for AI is complex and multifaceted. Many traditional legal doctrines and statutes of general application could answer the issues posed by AI or at least provide the starting point for responding to those issues.

For instance, tort law provides a framework for addressing harm caused by AI systems. In-house lawyers need to understand the potential risks and liabilities associated with AI systems and take appropriate steps to mitigate them. They also need to ensure that their organizations comply with applicable laws and regulations when using AI.

Regulatory Framework

Regulators around the world are grappling with how to regulate AI. The regulatory framework for AI varies by jurisdiction, and there is no one-size-fits-all approach.

For example, the European Union (EU) has gone furthest toward comprehensive AI regulation. In 2020, the European Commission released a white paper titled "On Artificial Intelligence - A European approach to excellence and trust," which outlined its vision for AI regulation. In 2021, the Commission proposed a legal framework, the Artificial Intelligence Act, which aims to ensure that AI is used in a way that is safe, transparent, and respects fundamental rights. The Act was adopted in 2024 and takes a risk-based approach, with its obligations phasing in over several years.

In the United States, there is no comprehensive federal AI regulation. However, there are laws and regulations that govern specific aspects of AI. For example, the Fair Credit Reporting Act (FCRA) regulates the consumer reports that feed many automated credit and employment decisions, and the Illinois Artificial Intelligence Video Interview Act (820 ILCS 42/1) regulates the use of AI to analyze video interviews.

Overall, the legal framework for AI is still evolving, and legal professionals need to keep up with the latest developments in this area to ensure that their organizations comply with applicable laws and regulations and mitigate potential risks and liabilities associated with AI systems.

Data Privacy and AI

As AI technology advances, data privacy has become a major concern for individuals and organizations alike. This section will discuss two important subtopics related to data privacy and AI: Personal Data Protection and Data Ownership.

Personal Data Protection

AI systems often rely on personal data to learn and improve their performance. However, the use of personal data in AI systems raises concerns about privacy and data protection. To address these concerns, many countries have enacted laws and regulations that require organizations to protect personal data.

In the European Union, the General Data Protection Regulation (GDPR) sets out strict rules for the collection, use, and storage of personal data. The GDPR requires organizations to have a lawful basis for processing personal data, such as the individual's consent, the performance of a contract, or a legitimate interest, and it gives individuals the right to access, correct, and delete their personal data.

Similarly, in the United States, the California Consumer Privacy Act (CCPA) requires organizations to provide consumers with notice of the personal information they collect and the purposes for which it will be used. The CCPA also gives consumers the right to request that their personal information be deleted and the right to opt out of the sale of their personal information; selling the personal information of consumers under 16 requires opt-in consent.
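As a rough illustration of what these rights can mean for a system that holds personal data, the sketch below models access, correction, deletion, and a sale restriction in a toy in-memory store. The class and method names are hypothetical, and treating the sale restriction as an opt-out flag is an assumption of the sketch. Real compliance also involves identity verification, backups, third-party processors, and statutory exceptions, none of which are shown.

```python
class PersonalDataStore:
    """Toy in-memory store of personal data keyed by user ID (hypothetical design)."""

    def __init__(self):
        self._records = {}         # user_id -> dict of personal data fields
        self._do_not_sell = set()  # user_ids that have opted out of sale

    def collect(self, user_id, data):
        """Store or update personal data for a user."""
        self._records.setdefault(user_id, {}).update(data)

    def access(self, user_id):
        """Right of access: return a copy of everything held about the user."""
        return dict(self._records.get(user_id, {}))

    def correct(self, user_id, field, value):
        """Right to correction: overwrite one field for an existing user."""
        if user_id not in self._records:
            raise KeyError(f"no data held for {user_id!r}")
        self._records[user_id][field] = value

    def delete(self, user_id):
        """Right to deletion: remove the record; return True if one existed."""
        return self._records.pop(user_id, None) is not None

    def opt_out_of_sale(self, user_id):
        """Record that this user's data must not be sold."""
        self._do_not_sell.add(user_id)

    def may_sell(self, user_id):
        """Data may be sold only if it exists and the user has not opted out."""
        return user_id in self._records and user_id not in self._do_not_sell


store = PersonalDataStore()
store.collect("user-1", {"email": "old@example.com", "city": "Lyon"})
store.correct("user-1", "email", "new@example.com")
store.opt_out_of_sale("user-1")
snapshot = store.access("user-1")    # copy of the corrected record
sellable = store.may_sell("user-1")  # False after the opt-out
deleted = store.delete("user-1")     # True because the record existed
```

Returning a copy from `access` keeps callers from modifying the stored record by accident, which matters once access requests are served to people outside the organization.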

Data Ownership

Another important issue related to data privacy and AI is data ownership. AI systems often generate insights and predictions based on data that is collected from individuals or organizations. This raises questions about who owns the data and who has the right to use it.

In many cases, the ownership of data is determined by the terms of a contract or agreement. For example, if an individual agrees to share their personal data with a company in exchange for a service, the company may own the data and have the right to use it for their own purposes.

However, in some cases, the ownership of data may be unclear or disputed. For example, if an AI system generates insights based on data that was collected from multiple sources, it may be difficult to determine who owns the resulting insights.

To address these issues, some experts have proposed the development of a new legal framework for data ownership and use. This framework would establish clear rules for the ownership and use of data in AI systems and would help to ensure that individuals and organizations are treated fairly.

In conclusion, data privacy and ownership are important issues that must be addressed as AI technology continues to advance. Strong data protection laws and a clear legal framework for data ownership and use, together with organizations that comply with them, can help to ensure that AI systems are used in a responsible and ethical manner.

Liability Issues in AI

As AI continues to advance and become more integrated into various industries, the issue of liability becomes increasingly important. Who is responsible when an AI system makes a mistake or causes harm? This section will explore the liability issues surrounding AI, including who may be liable and the role of tort law.

Who is Liable?

Determining liability in AI-related incidents can be complex. In some cases, it may be straightforward: if a manufacturer produces a faulty AI system that causes harm, the manufacturer may be held liable. In other cases, several parties may be involved, including the developer, the user, and any third-party data providers. The AI system itself is generally not treated as a legal person under current law, so responsibility has to be allocated among the people and organizations behind it.

In-house lawyers may play a crucial role in determining liability in AI-related incidents. They can help their organizations assess the risks associated with AI systems and develop appropriate risk management strategies. This may include reviewing contracts with third-party data providers, ensuring compliance with relevant regulations, and developing internal policies and procedures for using AI systems.

Tort Law and AI

Tort law is an area of law that deals with civil wrongs, including those that result in harm or injury to another person. In the context of AI, tort law may be used to hold individuals or organizations liable for harm caused by an AI system.

One challenge with applying tort law to AI is that traditional tort law principles may not always fit neatly with the unique characteristics of AI systems. For example, it may be difficult to establish the level of care that an AI system should be held to, or to determine whether an AI system acted negligently.

To address these challenges, some legal experts have proposed developing new legal frameworks specifically for AI. This may include creating new types of liability, such as strict liability for AI systems, or establishing new legal standards for AI-related incidents.

In conclusion, liability is a critical issue in the development and use of AI systems. In-house lawyers can play an important role in helping their organizations manage the risks associated with AI, while tort law will continue to evolve to address the unique challenges posed by AI-related incidents.

AI and Intellectual Property

As AI technology continues to rapidly evolve, legal practitioners are facing new challenges in protecting intellectual property rights. This section will explore two main areas of intellectual property law that are relevant to AI: patentability and copyright protection.

Patentability of AI

One of the main issues with AI and patent law is determining who can be named as the inventor of a patentable invention. In the United States, the Patent Act defines an inventor as the individual, or the individuals collectively, who invented or discovered the subject matter of the invention, and courts treat conception as the touchstone of inventorship. However, with AI, it is often difficult to determine who the "conceiver" of the invention is.

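In 2020, the US Patent and Trademark Office (USPTO) decided that an AI system cannot be named as an inventor on a patent application, reasoning that an inventor must be a natural person. The decision did not make the owner or operator of the AI system the inventor by default; a human can be named only if that person actually contributed to the conception of the invention. The decision was challenged in court, and in 2022 the Court of Appeals for the Federal Circuit upheld it in Thaler v. Vidal. How to treat inventions made with substantial AI assistance is still being debated.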

Copyright Protection for AI

Copyright law protects original works of authorship, including literary, artistic, and musical works. With AI-generated works, the question arises as to who should be considered the "author" of the work.

In the United States, the Copyright Act protects "original works of authorship" but does not define the term "author." The US Copyright Office and the courts have read the term to require a human creator. This has led to debates over whether AI-generated works can be protected by copyright law.

Currently, the US Copyright Office takes the position that material generated entirely by AI, without human creative input, is not eligible for copyright protection because it is not created by a human author. A federal district court upheld this position in Thaler v. Perlmutter (2023), but how much human involvement is enough to support a copyright claim is still being debated.

In conclusion, AI technology is presenting new challenges for intellectual property law. As AI continues to evolve, legal practitioners will need to stay up-to-date on the latest developments in order to protect their clients' intellectual property rights.

AI and Employment Law

The rise of AI has led to significant changes in the job market. While AI has created new job opportunities, it has also displaced some jobs, which has raised concerns about how employment law should respond.

Impact on Employment

AI has the potential to automate many jobs, and this could lead to job losses in some sectors. According to a report by the Organisation for Economic Co-operation and Development (OECD), the adoption of AI may increase labour market disparities between workers who have the skills to use AI effectively and those who do not. This could lead to a widening of the skills gap and a reduction in the number of jobs available for workers without the necessary skills.

Employee Rights

AI has also raised concerns about the impact on employee rights. For example, the use of AI in hiring decisions could lead to discrimination against certain groups of people. Although no US federal laws expressly regulate the use of AI in employment decisions, its use is likely subject to several existing statutes, particularly anti-discrimination laws such as Title VII of the Civil Rights Act of 1964. Employers must ensure that their use of AI does not result in discriminatory practices.
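One way an employer might screen an AI hiring tool for this kind of problem is to compare selection rates across groups. The sketch below computes each group's selection rate relative to the highest group's rate and flags ratios below 0.8, following the "four-fifths" rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. The function name, group names, and figures are made up for illustration, and a flagged ratio is a signal for closer review, not a legal finding of discrimination.

```python
def adverse_impact_ratios(applicants, selected):
    """Return each group's selection rate divided by the highest group's rate."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    highest = max(rates.values())
    if highest == 0:
        return {group: 0.0 for group in rates}  # nobody was selected at all
    return {group: rate / highest for group, rate in rates.items()}


# Made-up screening results from a hypothetical AI resume filter.
applicants = {"group_a": 100, "group_b": 80}
selected = {"group_a": 50, "group_b": 24}   # selection rates of 50% and 30%

ratios = adverse_impact_ratios(applicants, selected)
# Groups whose ratio falls below four-fifths of the top rate deserve a closer look.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
```

In this example, group_b is selected at 30% against 50% for group_a, a ratio of 0.6, so it is flagged.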

AI can also impact employee privacy rights. For example, the use of AI-powered surveillance systems could infringe on employee privacy. Employers must ensure that they comply with data protection laws and that they are transparent about the use of AI in the workplace.

In conclusion, the rise of AI has led to significant changes in the job market and has raised concerns about the impact on employment law. Employers must ensure that they comply with relevant laws and regulations and that they are transparent about the use of AI in the workplace.

Measuring AI Effectiveness

AI has become a widely used tool for businesses in a variety of industries. However, it is essential to measure its effectiveness to ensure that it is delivering the expected results. Measuring AI effectiveness is not a straightforward process, and there are several challenges that businesses face. This section will explore how to measure AI effectiveness and the challenges that come with it.

How to Measure AI Effectiveness

There are different ways to measure AI effectiveness, depending on the goals of the business. Here are some common metrics that businesses use to measure AI effectiveness:

- Accuracy: Accuracy is the most common metric used to measure AI effectiveness. It measures how often the AI system makes the correct decision or prediction. Higher accuracy generally indicates better performance, but accuracy can mislead when one outcome is rare: a fraud detector that never flags anything is still highly accurate if fraud is uncommon.

- Precision and Recall: Precision measures how often the AI system is correct when it predicts a positive outcome, while recall measures what share of the actual positive cases the AI system detects. These metrics are commonly used in applications such as fraud detection or medical diagnosis.

- Speed: Speed measures how quickly the AI system can process data and provide a response. This metric is crucial in applications such as customer service or chatbots where a quick response time is essential.

- Cost Savings: Cost savings is a metric that measures how much money the AI system saves the business. For example, an AI system that automates manual tasks can save a business money by reducing labor costs.
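The first two metrics above can be computed directly from a list of true outcomes and a list of predictions. The sketch below is a minimal illustration using made-up fraud data; the function name and the sample values are assumptions, not part of any particular library.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up data: 2 fraudulent transactions (1) out of 10.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
# A hypothetical model that catches one fraud case and raises one false alarm.
y_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)

# A "model" that never flags anything, for comparison.
never_flags = classification_metrics(y_true, [0] * 10)
```

Both models reach 80% accuracy on this data, but the one that never flags anything has a recall of zero, which is why accuracy alone is rarely enough when the outcome of interest is rare.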

Challenges in Measuring AI Effectiveness

Measuring AI effectiveness is not without its challenges. Here are some of the challenges that businesses face:

- Data Quality: Data quality is essential for accurate AI predictions. If the data used to train the AI system is of poor quality, the AI system's accuracy will suffer.

- Bias: AI systems can be biased if they are trained on biased data. This bias can lead to inaccurate predictions or decisions that discriminate against certain groups.

- Interpretability: AI systems can be difficult to interpret, making it challenging to understand how they arrived at their predictions or decisions. This lack of interpretability can make it challenging to identify and correct errors.

- Human Oversight: Human oversight is necessary to ensure that the AI system is making the correct decisions. However, human oversight can be time-consuming and costly.
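The bias challenge in particular can be made measurable by breaking an error rate down by group. The sketch below computes the false positive rate per group, that is, how often people who should not have been flagged were flagged anyway. The record layout, group names, and data are hypothetical, and false positive rate is only one of several fairness measures a business might choose.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, y_true, y_pred) tuples with binary labels."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 0:                 # only actual negatives can be false positives
            negatives[group] += 1
            if y_pred == 1:
                false_positives[group] += 1
    return {group: false_positives[group] / count for group, count in negatives.items()}


# Made-up predictions for two groups with the same number of actual negatives.
records = [
    ("group_a", 0, 0), ("group_a", 0, 0), ("group_a", 0, 1), ("group_a", 0, 0), ("group_a", 1, 1),
    ("group_b", 0, 1), ("group_b", 0, 1), ("group_b", 0, 0), ("group_b", 0, 0), ("group_b", 1, 1),
]
rates = false_positive_rate_by_group(records)
```

Here group_b is wrongly flagged twice as often as group_a (50% against 25%), a gap that overall accuracy would not reveal.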

In conclusion, measuring AI effectiveness is essential for businesses to ensure that their AI systems are delivering the expected results. While there are challenges in measuring AI effectiveness, businesses can overcome them by using the right metrics and ensuring that their AI systems are trained on quality data.

Developing and Improving AI

AI is a rapidly growing field, and the way AI systems are developed and improved poses significant legal challenges. To ensure that AI is developed and improved in a responsible and ethical manner, it is crucial to understand the AI development and improvement process.

AI Development Process

AI development involves several stages, including data collection, data cleaning, data labeling, algorithm development, and model testing. During the data collection stage, developers collect large amounts of data that will be used to train the AI model. However, data collection raises important legal issues, such as privacy and data protection.

The data cleaning stage involves removing any errors or inconsistencies in the collected data, while the data labeling stage involves labeling the data to make it easier for the AI model to learn. Algorithm development involves creating the mathematical models that will be used to analyze the data and make predictions. Finally, model testing involves testing the AI model to ensure that it is accurate and reliable.
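The stages described above can be sketched end to end on a tiny, made-up fraud dataset. Everything in the example is an assumption chosen for brevity: the field names, the reviewer labels, and the "model," which is just a single amount threshold standing in for a real learning algorithm.

```python
def clean(rows):
    """Data cleaning: drop rows whose amount is missing or not a number."""
    return [r for r in rows if isinstance(r.get("amount"), (int, float))]


def label(rows, reviewer_labels):
    """Data labeling: attach the label a human reviewer assigned to each row."""
    return [{**r, "fraud": reviewer_labels[r["id"]]} for r in rows]


def accuracy(rows, threshold):
    """Share of rows where 'amount >= threshold' matches the fraud label."""
    return sum((r["amount"] >= threshold) == r["fraud"] for r in rows) / len(rows)


def train(rows):
    """Algorithm development: pick the threshold that fits the training rows best."""
    candidates = sorted({r["amount"] for r in rows})
    return max(candidates, key=lambda t: accuracy(rows, t))


# Data collection: made-up transactions, one of which has a missing amount.
raw = [
    {"id": 1, "amount": 20}, {"id": 2, "amount": 35}, {"id": 3, "amount": None},
    {"id": 4, "amount": 900}, {"id": 5, "amount": 50}, {"id": 6, "amount": 1200},
    {"id": 7, "amount": 40}, {"id": 8, "amount": 1000},
]
reviewer_labels = {1: False, 2: False, 3: False, 4: True,
                   5: False, 6: True, 7: False, 8: True}

dataset = label(clean(raw), reviewer_labels)
train_rows, test_rows = dataset[:5], dataset[5:]  # hold out rows the model never sees
threshold = train(train_rows)
test_accuracy = accuracy(test_rows, threshold)    # model testing on held-out data
```

Testing on rows that were held out of training is the important design choice here: a model evaluated only on the data it was fitted to will usually look more reliable than it is.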

AI Improvement Process

Once the AI model has been developed, it is important to continually improve it to ensure that it remains accurate and up-to-date. AI improvement involves several stages, including data monitoring, model retraining, and algorithm refinement.

Data monitoring involves monitoring the data that is being fed into the AI model to ensure that it is accurate and up-to-date. Model retraining involves updating the AI model with new data to improve its accuracy, while algorithm refinement involves refining the mathematical models used by the AI model to make it more accurate and efficient.
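A simple form of data monitoring is to compare recent inputs with the data the model was trained on and to trigger retraining when they drift apart. The sketch below measures how far the recent mean has moved, in units of the training data's standard deviation. The function names and the threshold of 2.0 are illustrative assumptions; production systems typically use richer statistical tests.

```python
import statistics


def drift_score(baseline, recent):
    """Distance between the recent and baseline means, in baseline standard deviations."""
    spread = statistics.stdev(baseline)
    shift = abs(statistics.mean(recent) - statistics.mean(baseline))
    if spread == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


def needs_retraining(baseline, recent, threshold=2.0):
    """Flag the model for retraining when the input data has shifted too far."""
    return drift_score(baseline, recent) > threshold


baseline = [48, 52, 50, 49, 51, 50]  # feature values seen during training
stable = [50, 51, 49, 50]            # recent inputs that resemble the training data
shifted = [58, 60, 59, 61]           # recent inputs that have moved noticeably

stable_flag = needs_retraining(baseline, stable)
shifted_flag = needs_retraining(baseline, shifted)
```

With these values, the stable batch leaves the model alone, while the shifted batch is flagged for retraining.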

To ensure that AI is developed and improved responsibly, it is important to consider legal issues such as data protection, privacy, and transparency. Developers must ensure that they are collecting and using data in a responsible and ethical manner, and that they are transparent about how the AI model is making decisions.

In conclusion, developing and improving AI is a complex process that requires careful consideration of legal issues. By understanding the AI development and improvement process, developers can ensure that AI is developed and improved in a responsible and ethical manner.

Content Liability and AI

AI and Content Liability

As AI systems become more prevalent in content creation and distribution, questions arise regarding who is responsible for the content generated by these systems. In some cases, AI systems may produce content that is harmful or offensive, leading to questions of liability for the content.

Under current laws, liability for content generally falls on the creator or publisher of the content. However, as AI systems become more sophisticated, it may become more difficult to determine who is responsible for the content generated by these systems.

Content Moderation and AI

AI systems are also being used for content moderation, which involves identifying and removing harmful or offensive content from online platforms. While AI systems can help automate the content moderation process, they are not foolproof and can sometimes make mistakes.

One challenge with using AI for content moderation is that it can be difficult to define what constitutes harmful or offensive content. AI systems may be trained on certain types of content, but they may not be able to accurately identify new types of harmful content that emerge over time.

Another challenge with using AI for content moderation is that it can be difficult to ensure that the AI system is not biased against certain groups or types of content. AI systems are only as good as the data they are trained on, and if that data is biased, the AI system may be biased as well.

To address these challenges, companies that use AI for content moderation must carefully monitor the performance of their AI systems and make adjustments as necessary. They must also ensure that their AI systems are trained on diverse and unbiased data to minimize the risk of bias.
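One common way to combine automation with monitoring is to let the AI system act only on clear-cut cases and send uncertain ones to human reviewers. The sketch below assumes a hypothetical moderation model that outputs a harm score between 0 and 1; the threshold values and names are illustrative, not recommendations.

```python
from collections import Counter


def route(score, remove_at=0.9, allow_at=0.2):
    """Map a harm score in [0, 1] to an action (thresholds are illustrative)."""
    if score >= remove_at:
        return "remove"
    if score <= allow_at:
        return "allow"
    return "human_review"


# Made-up harm scores from a hypothetical moderation model.
scores = [0.97, 0.05, 0.55, 0.15, 0.91, 0.40]
decisions = [route(s) for s in scores]
summary = Counter(decisions)  # how much of the traffic each path receives
```

Tracking the share of content on each path over time gives a company an early signal when the model starts to behave differently, and the human-reviewed cases can later serve as fresh training data.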

Overall, as AI systems become more prevalent in content creation and moderation, it is important to consider the legal implications of these systems and to ensure that they are used responsibly.